Preface: a project at work uses SURF to compute image features, so this post takes a first look at SURF.

Reference for this post: https://blog.csdn.net/u012483097/article/details/105369239/

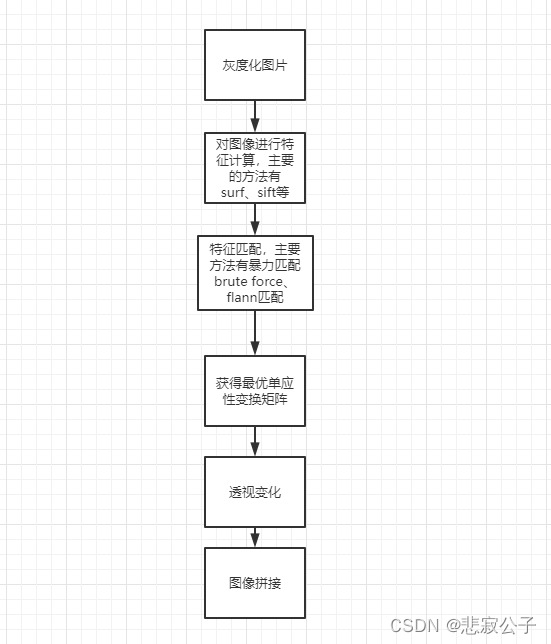

- Main flowchart

- Convert the images to grayscale
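
`cv2.cvtColor(..., cv2.COLOR_BGR2GRAY)` computes a weighted sum of the color channels, Y = 0.299·R + 0.587·G + 0.114·B, keeping in mind that `cv2.imread` stores pixels in B, G, R order. The NumPy sketch below reproduces that formula for illustration only; `bgr_to_gray` is a hypothetical helper, and because OpenCV uses fixed-point arithmetic internally its output can differ slightly from this floating-point version.

```python
import numpy as np


def bgr_to_gray(img_bgr: np.ndarray) -> np.ndarray:
    """Hypothetical helper: weighted sum of the B, G, R channels of an (H, W, 3) image."""
    weights = np.array([0.114, 0.587, 0.299])  # order matches OpenCV's B, G, R channels
    gray = img_bgr.astype(np.float64) @ weights  # (H, W, 3) @ (3,) -> (H, W)
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)


# One pure-red pixel, written in BGR order
print(bgr_to_gray(np.array([[[0, 0, 255]]], dtype=np.uint8)))  # [[76]]
```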

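```python
import cv2
import numpy as np

img_path1 = r"../left.jpg"
img_path2 = r"../right.jpg"
img1 = cv2.imread(img_path1)
img2 = cv2.imread(img_path2)
# cv2.imread returns None instead of raising when a file cannot be read
if img1 is None or img2 is None:
    raise FileNotFoundError("could not read ../left.jpg or ../right.jpg")

# Convert to grayscale
img1_gray = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
img2_gray = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
```

- SURF computation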

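```python
# SURF lives in opencv-contrib (cv2.xfeatures2d) and is patented; it is only
# available in builds compiled with the OPENCV_ENABLE_NONFREE option.
# hessianThreshold=1000: keep only keypoints whose Hessian response exceeds 1000
surf = cv2.xfeatures2d.SURF_create(hessianThreshold=1000)
# Compute the keypoints and descriptors of img1 and img2
kp1, des1 = surf.detectAndCompute(img1_gray, None)
kp2, des2 = surf.detectAndCompute(img2_gray, None)
```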
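
SURF is fast largely because of integral images: after one pass over the image, the sum of the pixels in any axis-aligned rectangle costs four lookups regardless of the rectangle's size. SURF uses such box sums to approximate the second-order Gaussian derivatives D_xx, D_yy and D_xy, and scores each candidate point by the approximate Hessian determinant D_xx·D_yy - (0.9·D_xy)²; roughly speaking, `hessianThreshold` discards points whose response falls below it. A minimal NumPy sketch of the integral-image part only (the helper names are hypothetical, not OpenCV API):

```python
import numpy as np


def integral_image(img: np.ndarray) -> np.ndarray:
    """Zero-padded integral image: ii[y, x] == img[:y, :x].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.int64)
    ii[1:, 1:] = img.astype(np.int64).cumsum(axis=0).cumsum(axis=1)
    return ii


def box_sum(ii: np.ndarray, y0: int, x0: int, y1: int, x1: int) -> int:
    """Sum of img[y0:y1, x0:x1] from four lookups, independent of the box size."""
    return int(ii[y1, x1] - ii[y0, x1] - ii[y1, x0] + ii[y0, x0])


img = np.arange(12).reshape(3, 4)
ii = integral_image(img)
print(box_sum(ii, 1, 1, 3, 3))  # 5 + 6 + 9 + 10 = 30
```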

- Feature selection and matching
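
Both matchers in this step call `knnMatch(des1, des2, k=2)`, which returns, for every query descriptor, its two nearest train descriptors. Lowe's ratio test then keeps a match only when the nearest distance is clearly smaller than the second nearest, which rejects ambiguous matches such as those on repeated texture. The sketch below implements brute-force 2-NN matching plus the ratio test in NumPy and returns `(queryIdx, trainIdx, distance)` tuples to mirror the `DMatch` fields; `knn2_ratio_match` and the 0.75 ratio are assumptions for illustration, and FLANN would replace the exhaustive distance matrix with an approximate nearest-neighbour search.

```python
import numpy as np


def knn2_ratio_match(des1, des2, ratio=0.75):
    """Hypothetical helper: brute-force 2-NN matching (L2) followed by Lowe's ratio test.

    Returns a list of (queryIdx, trainIdx, distance) tuples.
    """
    des1 = np.asarray(des1, dtype=np.float64)
    des2 = np.asarray(des2, dtype=np.float64)
    if len(des2) < 2:
        raise ValueError("need at least 2 train descriptors for a 2-NN ratio test")
    # dists[i, j] = Euclidean distance between des1[i] and des2[j]
    dists = np.linalg.norm(des1[:, None, :] - des2[None, :, :], axis=2)
    # Indices of the two closest train descriptors for each query descriptor
    nearest2 = np.argsort(dists, axis=1)[:, :2]
    goods = []
    for q, (best, second) in enumerate(nearest2):
        d1, d2 = dists[q, best], dists[q, second]
        if d1 < ratio * d2:  # best match is clearly better than the runner-up
            goods.append((q, int(best), float(d1)))
    return goods


des1 = [[0.5, 0.0], [5.0, 0.0]]
des2 = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]
print(knn2_ratio_match(des1, des2))  # [(0, 0, 0.5)]
```

The second query descriptor is rejected because it is equally far (5.0) from two train descriptors, exactly the ambiguous case the ratio test is meant to drop.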

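```python
# BFMatcher.knnMatch()          brute-force matching
# FlannBasedMatcher.knnMatch()  FLANN matching
# Return value: for each query descriptor, a list of its k best DMatch objects.
# A DMatch has 4 attributes:
#   distance: distance between the two descriptors; the smaller, the better the match
#   trainIdx: index of the descriptor in the train set (des2)
#   queryIdx: index of the descriptor in the query set (des1)
#   imgIdx:   index of the train image
usemode = 'b'  # 'b': brute-force matching, 'f': FLANN matching

# ======================brute-force match============================#
if usemode == 'b':
    bf = cv2.BFMatcher()  # default norm is cv2.NORM_L2, suitable for SURF float descriptors
    matches = bf.knnMatch(des1, des2, k=2)
# ======================FLANN match============================#
elif usemode == 'f':
    kf = cv2.FlannBasedMatcher()
    matches = kf.knnMatch(des1, des2, k=2)
else:
    raise ValueError("usemode must be 'b' or 'f'")

# Select the best matches with Lowe's ratio test (0.75 is a common choice)
goods = []
for pair in matches:
    # knnMatch can return fewer than k neighbours for a query descriptor
    if len(pair) == 2 and pair[0].distance < 0.75 * pair[1].distance:
        goods.append(pair[0])

# Draw the selected matches for visual inspection
temp = cv2.drawMatches(img1, kp1, img2, kp2, goods, None)

img1points = []
img2points = []
for m in goods:
    # queryIdx indexes kp1 (query image), trainIdx indexes kp2 (train image)
    img1points.append(kp1[m.queryIdx].pt)
    img2points.append(kp2[m.trainIdx].pt)
```

- Obtain the optimal homography matrix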
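
A homography H is a 3×3 matrix, defined up to scale, that maps a point (x, y) to (u, v) with u = (h11·x + h12·y + h13) / (h31·x + h32·y + h33) and the analogous expression for v. Each point pair yields two linear equations in the nine entries of H, so four pairs suffice. The sketch below is the plain Direct Linear Transform solved with an SVD; it is not what `cv2.findHomography` does with `cv2.LMEDS`, which additionally rejects outlier matches using a least-median-of-squares scheme, and `homography_dlt` is a hypothetical helper. Note that the article passes the right-image points first, `findHomography(dst_pts, src_pts, ...)`, so the resulting H maps right-image coordinates into the left image's frame, the direction `warpPerspective` needs in the next step.

```python
import numpy as np


def homography_dlt(src, dst):
    """Hypothetical helper: estimate H with dst ~ H @ src from >= 4 point pairs.

    Plain DLT: no coordinate normalization and no outlier rejection.
    """
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    if len(src) < 4 or src.shape != dst.shape:
        raise ValueError("need at least 4 matching point pairs")
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # From u * (h31 x + h32 y + h33) = h11 x + h12 y + h13, and likewise for v
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # h is the right singular vector belonging to the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the arbitrary scale so that H[2, 2] == 1
```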

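```python
# findHomography needs at least 4 point pairs
if len(img1points) < 4:
    raise RuntimeError("not enough good matches to estimate a homography")
src_pts = np.float32(img1points)  # matched points in the left image
dst_pts = np.float32(img2points)  # matched points in the right image
# Find the optimal homography that maps the plane to be matched (right image)
# onto the target plane (left image); LMEDS is a robust least-median-of-squares method
H, _ = cv2.findHomography(dst_pts, src_pts, cv2.LMEDS)
```

- Perspective warp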
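
For a point (x, y), the forward mapping is [x', y', w']ᵀ = H·[x, y, 1]ᵀ followed by division by w'. `cv2.warpPerspective` actually works backwards, sampling each output pixel from the source location given by H⁻¹, but the forward mapping is handy for checking the canvas: the warp in this step uses a canvas as wide as both images together, and projecting the right image's corners through H shows whether the warped image fits. A NumPy sketch with hypothetical helper names:

```python
import numpy as np


def project_points(H, pts):
    """Apply homography H to (N, 2) points: [x', y', w'] = H @ [x, y, 1], then divide by w'."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def warped_corners(H, width, height):
    """Where the four corners of a width x height image extent land after mapping with H."""
    corners = np.array([[0, 0], [width, 0], [width, height], [0, height]])
    return project_points(H, corners)
```

With the article's variables, `warped_corners(H, img2.shape[1], img2.shape[0])` gives the corner positions; x values beyond `img1.shape[1] + img2.shape[1]` would mean part of the warped right image is cut off.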

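```python
# Warp the right image into the left image's coordinate frame.
# dsize is (width, height): wide enough for both images side by side,
# as tall as the shorter of the two images.
img_warpPerspective = cv2.warpPerspective(
    img2, H,
    (img1.shape[1] + img2.shape[1], min(img1.shape[0], img2.shape[0])))
```

- Image stitching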

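```python
# Paste the left image directly onto the left part of the canvas.
# The canvas height is min(h1, h2), so crop img1 to that height.
h, w = img_warpPerspective.shape[0], img1.shape[1]
img_warpPerspective[:, 0:w] = img1[0:h, 0:w]
```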
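
Because the canvas is as wide as both images together, the region to the right of the warped image usually stays black. One optional clean-up, assumed here rather than taken from the original post, is to paste the left image and then crop the all-black columns at the right edge. `stitch_and_crop` is a hypothetical NumPy helper that handles both grayscale and color arrays:

```python
import numpy as np


def stitch_and_crop(warped_right: np.ndarray, left: np.ndarray) -> np.ndarray:
    """Hypothetical helper: paste `left` at the top-left, then drop empty columns on the right."""
    canvas = warped_right.copy()
    # Crop to the overlap so mismatched heights or widths do not raise
    h = min(canvas.shape[0], left.shape[0])
    w = min(canvas.shape[1], left.shape[1])
    canvas[:h, :w] = left[:h, :w]
    # View as (H, W, C) so grayscale and color images are handled the same way
    per_pixel = canvas.reshape(canvas.shape[0], canvas.shape[1], -1)
    filled = np.flatnonzero(per_pixel.any(axis=(0, 2)))  # columns with any non-zero pixel
    return canvas[:, : filled[-1] + 1] if filled.size else canvas
```

It could be called as `result = stitch_and_crop(img_warpPerspective, img1)`; note that it would also trim genuinely black columns at the far right of the scene.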

- Results

Left image

Right image
