目录

- 数据预处理

- 算法流程

- 源码

- [1] 点云转深度图

- [2] 物品分割代码

数据预处理

RGB图像和深度图由Kinect 拍摄,需要转换为点云格式才能输入 PCL 点云库进行处理。为了便于后续的处理,我选择将其转换为有序点云而不是无序点云。代码见文末[1]。

有序点云中存在很多噪声,这些对于分割物体有较大的干扰,在后续的操作中需要进行滤波。

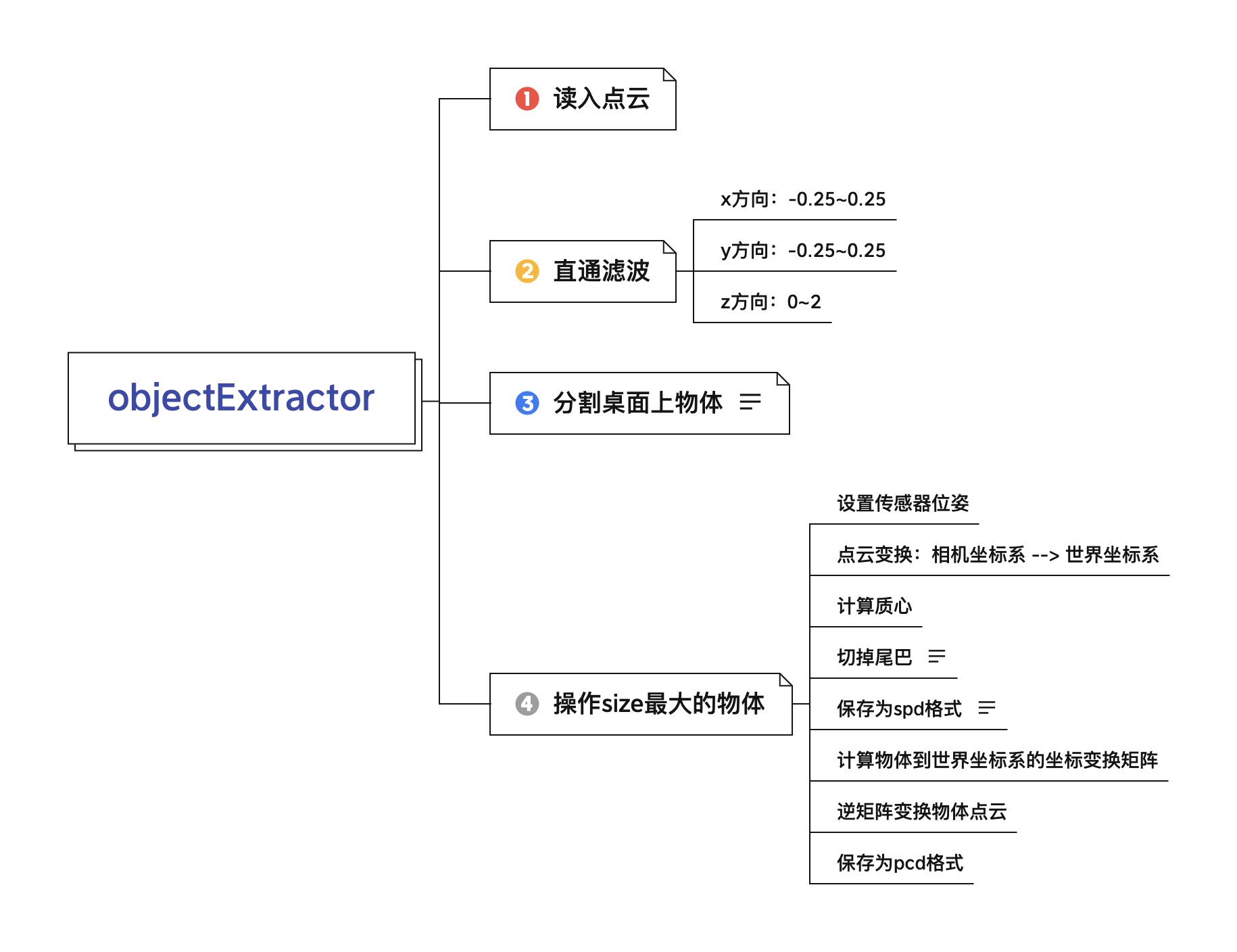

算法流程

整个算法的流程如下图所示:

分割结果存在一块缺失的部分,经在其他数据上测试,也会出现这种情况,可能是由于只有单幅点云,单一视角无法完全分割出完整的物品。

源码

[1] 点云转深度图

/*

* @Auther: 宇宙爆肝锦标赛冠军

* @Date: 2022-03-31 10:47:49

* @LastEditors: 宇宙爆肝锦标赛冠军

* @LastEditTime: 2022-04-16 11:17:11

* @Description: 用于将RGB和对应的深度图转换为 xyzrgba 格式有序点云

*/

#include <iostream>

#include <string>

using namespace std;

// OpenCV 库

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

// PCL 库

#include <pcl/io/pcd_io.h>

#include <pcl/point_types.h>

// 定义点云类型

typedef pcl::PointXYZRGBA PointT;

typedef pcl::PointCloud<PointT> PointCloud;

// 相机内参

const double camera_factor = 1000;

const double camera_cx = 314.461;

const double camera_cy = 243.868;

const double camera_fx = 615.316;

const double camera_fy = 614.77;

int main(int argc, char *argv[])

{

// 读取./data/rgb.png和./data/depth.png,并转化为点云

// 图像矩阵

cv::Mat rgb, depth;

rgb = cv::imread(argv[1]); // rgb 图像是8UC3的彩色图像

depth = cv::imread(argv[2], -1); // depth 是16UC1的单通道图像,注意flags设置-1,表示读取原始数据不做任何修改

// 点云变量

// 使用智能指针,创建一个空点云。这种指针用完会自动释放。

PointCloud::Ptr cloud(new PointCloud);

// 遍历深度图

for (int m = 0; m < depth.rows; m++)

for (int n = 0; n < depth.cols; n++)

{

// 获取深度图中(m,n)处的值

ushort d = depth.ptr<ushort>(m)[n];

PointT p;

// 计算这个点的空间坐标

p.z = double(d) / camera_factor;

p.x = (n - camera_cx) * p.z / camera_fx;

p.y = (m - camera_cy) * p.z / camera_fy;

// 从rgb图像中获取它的颜色

// rgb是三通道的BGR格式图,所以按下面的顺序获取颜色

p.b = rgb.ptr<uchar>(m)[n * 3];

p.g = rgb.ptr<uchar>(m)[n * 3 + 1];

p.r = rgb.ptr<uchar>(m)[n * 3 + 2];

// 把p加入到点云中

cloud->points.push_back(p);

}

// 设置并保存点云

cloud->height = depth.rows;

cloud->width = depth.cols;

cout << "point cloud size = " << cloud->points.size() << endl;

cloud->is_dense = false;

pcl::io::savePCDFile("result.pcd", *cloud);

// 清除数据并退出

cloud->points.clear();

cout << "Point cloud saved." << endl;

return 0;

}

[2] 物品分割代码

/*

* @Auther: 宇宙爆肝锦标赛冠军

* @Date: 2022-03-31 09:54:41

* @LastEditors: 宇宙爆肝锦标赛冠军

* @LastEditTime: 2022-03-31 14:41:23

* @Description: 对桌面上的物品进行分割

*/

#include <pcl/features/moment_of_inertia_estimation.h>

#include <pcl/io/pcd_io.h>

#include <pcl/filters/passthrough.h>

#include <pcl/filters/extract_indices.h>

#include <pcl/PointIndices.h>

#include <pcl/common/transforms.h>

#include <pcl/point_types.h>

#include <pcl/apps/impl/dominant_plane_segmentation.hpp>

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/features/moment_of_inertia_estimation.h>

#include <vector>

using namespace std;

using namespace pcl;

typedef pcl::PointXYZRGBA PointT;

typedef vector<pcl::PointCloud<PointT>::Ptr> PointCloudVector;

typedef boost::shared_ptr<PointCloudVector> PointCloudVectorPtr;

const double depthK[] = { 418.7550354003906, 0.0, 427.534423828125,

0.0, 418.7550354003906, 240.6034698486328,

0.0, 0.0, 1.0 };

const double colorK[] = { 917.871337890625, 0.0, 646.165771484375,

0.0, 918.4445190429688, 379.54443359375,

0.0, 0.0, 1.0 };

pcl::PointCloud<PointT>::Ptr transformCamera2World(pcl::PointCloud<PointT>::Ptr cloud_in)

{

auto p = cloud_in->sensor_origin_;

auto o = cloud_in->sensor_orientation_;

Eigen::Matrix4f trans2 = Eigen::Matrix4f::Identity();

trans2.block(0, 0, 3, 3) = o.toRotationMatrix();

trans2.block(0, 3, 3, 1) = Eigen::Vector3f(p.x(), p.y(), p.z());

pcl::PointCloud<PointT>::Ptr cloud_out(new pcl::PointCloud<PointT>);

pcl::transformPointCloud(*cloud_in, *cloud_out, trans2);

return cloud_out;

}

pcl::PointXY framePC2RGB(PointT point)

{

pcl::PointXY pout;

pout.x = point.x * colorK[0] / point.z + colorK[2];

pout.y = point.y * colorK[4] / point.z + colorK[5];

return pout;

}

pcl::PointXY framePC2D(PointT point)

{

pcl::PointXY pout;

pout.x = point.x * depthK[0] + point.z * depthK[2];

pout.y = point.y * depthK[4] + point.z * depthK[5];

return pout;

}

class ObjectExtractor

{

protected:

public:

// 直通滤波,去除背景

PointCloud<PointT>::Ptr passThrough(PointCloud<PointT>::Ptr cloud_in)

{

cout << "passThrough..." << endl;

PointCloud<PointT>::Ptr cloud_out(new pcl::PointCloud<PointT>);

// std::vector<int> nan_indices;

// removeNaNFromPointCloud(*cloud_in,*cloud_out,nan_indices);

PassThrough<PointT> pass;

pass.setInputCloud (cloud_in);

pass.setFilterFieldName ("z");// Z轴

pass.setFilterLimits (0, 2);// 范围

pass.setKeepOrganized(true);

pass.filter (*cloud_out);

const double xylimit = 0.25;

pass.setInputCloud (cloud_out);

pass.setFilterFieldName ("x");// Z轴

pass.setFilterLimits (-xylimit, xylimit);// 范围

pass.setKeepOrganized(true);

pass.filter (*cloud_out);

pass.setInputCloud (cloud_out);

pass.setFilterFieldName ("y");// Z轴

pass.setFilterLimits (-xylimit, xylimit);// 范围

pass.setKeepOrganized(true);

pass.filter (*cloud_out);

cout << "PointCloud after filtering has: " << cloud_out->points.size () << " data points." << endl;

// pcl::io::savePCDFile(save_dir + "/" + string("1-pass-through-filtered.pcd"), *cloud_out);

// viewPCD(cloud_out, "cutted pcd");

return cloud_out;

}

// 物体分割

PointCloudVectorPtr segementOnTableCluster(PointCloud<PointT>::Ptr cloud_in)//对桌面上物体进行分割

{

cout << "segementOnTableCluster..." << endl;

PointCloudVectorPtr clouds_out(new PointCloudVector);

apps::DominantPlaneSegmentation<PointT> dps;

dps.setInputCloud (cloud_in);

dps.setMaxZBounds (1.5); //z方向范围

dps.setObjectMinHeight (0.01);

dps.setMinClusterSize (500);

dps.setWSize (5);

dps.setDistanceBetweenClusters (0.02f);

dps.setSACThreshold(0.005);

std::vector<pcl::PointIndices> indices_;//分割物体索引

Eigen::Vector4f table_plane_;//桌面方程参数abcd

//dps.setDownsamplingSize (0.005f);

dps.compute_fast (*clouds_out);

dps.getIndicesClusters (indices_);

dps.getTableCoefficients (table_plane_);

return clouds_out;

}

//计算重心

Eigen::Vector4f computeCentroid(pcl::PointCloud<PointT>::Ptr cloud_in)

{

Eigen::Vector4f centroid;

pcl::compute3DCentroid(*cloud_in, centroid);

std::cout << "The centroid are: ("

<< centroid[0] << ", "

<< centroid[1] << ", "

<< centroid[2] << ")." << std::endl;

return centroid;

}

pcl::PointCloud<PointT>::Ptr loadPCD(string pcd_file)

{

pcl::PointCloud<PointT>::Ptr cloud (new pcl::PointCloud<PointT>);

if (pcl::io::loadPCDFile<PointT>(pcd_file, *cloud) == -1)

{

std::cout << "Cloud reading failed." << std::endl;

return nullptr;

}

return cloud;

}

void viewPCD(pcl::PointCloud<PointT>::Ptr cloud_in, string name = "")

{

pcl::visualization::CloudViewer viewer(name);

viewer.showCloud(cloud_in);

while (!viewer.wasStopped());

}

void savePCD(pcl::PointCloud<PointT>::Ptr cloud, string fpout)

{

pcl::io::savePCDFile(fpout, *cloud);

}

void saveSPD(pcl::PointCloud<PointT>::Ptr cloud, string fpout)

{

ofstream fout(fpout);

for (auto &point : cloud->points)

{

fout << point.x << " " << point.y << " " << point.z << " "

<< (uint)point.r << " " << (uint)point.g << " " << (uint)point.b << endl;

}

fout.close();

}

// 去掉由于相机失真造成的“尾巴”,方向应随实际情况而定,本实验中相机是沿y轴正方向的。

pcl::PointCloud<PointT>::Ptr cutTail(pcl::PointCloud<PointT>::Ptr cloud, Eigen::Vector4f centroid)

{

pcl::PointCloud<PointT>::Ptr cloud_out(new pcl::PointCloud<PointT>);

for (auto &point : cloud->points)

{

if (point.z > centroid.z() || point.y < centroid.y() + 0.02)

cloud_out->push_back(point);

}

return cloud_out;

}

// 计算物体到世界坐标系的坐标变换矩阵

Eigen::Matrix4f computeH_model2world(pcl::PointCloud<PointT>::Ptr cloud, vector<double> modelParams, float & factor)

{

std::vector <float> moment_of_inertia;

std::vector <float> eccentricity;

PointT min_point_OBB;

PointT max_point_OBB;

PointT position_OBB;

Eigen::Matrix3f rotational_matrix_OBB;

float major_value, middle_value, minor_value;

Eigen::Vector3f major_vector, middle_vector, minor_vector;

Eigen::Vector3f mass_center;

// 计算物体参数

pcl::MomentOfInertiaEstimation<PointT> feature_extractor;

feature_extractor.setInputCloud(cloud);

feature_extractor.compute ();

feature_extractor.getMomentOfInertia (moment_of_inertia);

feature_extractor.getEccentricity (eccentricity);

feature_extractor.getOBB (min_point_OBB, max_point_OBB, position_OBB, rotational_matrix_OBB);

feature_extractor.getEigenValues (major_value, middle_value, minor_value);

feature_extractor.getEigenVectors (major_vector, middle_vector, minor_vector);

feature_extractor.getMassCenter (mass_center);

// 这部分的坐标关系把我自己都绕晕了,总之结果是对的。(我不懂但它跑起来了.jpg)

Eigen::Matrix4f H_model2world;

H_model2world.setIdentity();

H_model2world.block(0, 0, 3, 1) = major_vector; // x为最长轴

H_model2world.block(0, 1, 3, 1) = middle_vector;

H_model2world.block(0, 2, 3, 1) = minor_vector;

// H_model2world.block(0, 0, 3, 3) = rotational_matrix_OBB;

// H_model2world.block(0, 3, 3, 1) = position_OBB.getVector3fMap() + min_point_OBB.getVector3fMap();

Eigen::Vector4f max_point = H_model2world.transpose() * max_point_OBB.getVector4fMap();

Eigen::Vector4f min_point = H_model2world.transpose() * min_point_OBB.getVector4fMap();

cout << "max_point_OBB = " << max_point_OBB.getVector3fMap().transpose() << endl;

cout << "min_point_OBB = " << min_point_OBB.getVector3fMap().transpose() << endl;

cout << "max_point = " << max_point.transpose() << endl;

cout << "min_point = " << min_point.transpose() << endl;

H_model2world.block(0, 3, 3, 1) = position_OBB.getVector3fMap();

if (max_point.x() < 0)

{

H_model2world.block(0, 0, 3, 3) = H_model2world.block(0, 0, 3, 3) * Eigen::AngleAxisf(M_PI, Eigen::Vector3f::UnitZ()).matrix();

max_point = H_model2world * max_point_OBB.getVector4fMap();

}

// 这里需要补充一个方法确定物体的方向,理论上这段代码无法保证方向是正确的

Eigen::Matrix4f H_tmp;

H_tmp.setIdentity();

H_tmp.block(0, 3, 3, 1) = min_point_OBB.getVector3fMap();

H_model2world = H_model2world * H_tmp;

// 实物与模型大小不同,因此计算一个缩放倍数

Eigen::Vector3f max_point3 = max_point_OBB.getVector3fMap();

float d = max_point3.maxCoeff() * 2;

factor = 1/d;

return H_model2world;

}

static double gaussianF(double x, double u, double sigma2)

{

return 1.0 / sqrt(2 * 3.1415927 * sigma2) * expf(-pow(fabs(x - u), 2) / (2 * sigma2));

}

static double GMMF(double x, vector<double> weights, vector<double> means, vector<double> sigma2s)

{

double prob = 0;

for (int i = 0; i < means.size(); ++i)

prob += gaussianF(x, means[i], sigma2s[i]) * weights[i];

return prob;

}

pcl::PointCloud<PointT>::Ptr renderGMM(pcl::PointCloud<PointT>::Ptr cloud, float factor, vector<double> weights, vector<double> means, vector<double> sigma2s)

{

pcl::PointCloud<PointT>::Ptr cloud_out(new pcl::PointCloud<PointT>);

// int i = 0;

// ofstream fout("test.txt");

for (auto point : cloud->points)

{

// ++i;

float prob = GMMF(point.x * factor, weights, means, sigma2s);

// fout << prob << " ";

// if (!(i%100))

// cout << "point.x = " << point.x * factor << endl;

prob = (prob/2 < 1) ? prob/2 : 1;

Eigen::Vector3f white(255, 255, 255);

Eigen::Vector3f red(255, 0, 0);

Eigen::Vector3f srcColor(point.getRGBVector3i()[0], point.getRGBVector3i()[1], point.getRGBVector3i()[2]);

Eigen::Vector3f color = white * (1-prob) + red * prob;

// cout << "color = " << color.x() << ", " << color.y() << ", " << color.z() << endl;

point.r = color.x();

point.g = color.y();

point.b = color.z();

cloud_out->push_back(point);

}

// fout.close();

return cloud_out;

}

};

ObjectExtractor *extractor;

int main(int argc, char **argv)

{

clock_t start_time, end_time;

start_time = clock();

pcl::PointCloud<PointT>::Ptr cloud(new PointCloud<PointT>);

cloud = extractor->loadPCD(argv[1]);

cout << "读取点云: " << cloud->width << ", " << cloud->height << endl;

auto p = cloud->sensor_origin_;

auto o = cloud->sensor_orientation_;

cloud = extractor->passThrough(cloud);

// extractor->viewPCD(cloud, "passThrough.pcd");

extractor->savePCD(cloud, "passThrough.pcd");

// extractor->savePCD(cloud, req.outputDir + "/passThrough.pcd");

PointCloudVectorPtr objects;

objects = extractor->segementOnTableCluster(cloud);

cout << "共分割出:" << objects->size() << "个物体" << endl;

pcl::PointCloud<PointT>::Ptr object = (*objects)[0];

for (auto &object0 : *objects)

{

if (object0->size() > object->size())

object = object0;

}

object->sensor_origin_ = p;

object->sensor_orientation_ = o;

object = transformCamera2World(object);

// extractor->viewPCD(object);

auto centroid = extractor->computeCentroid(object);

object = extractor->cutTail(object, centroid);

// extractor->viewPCD(object);

// extractor->savePCD(object, req.outputDir + "/object.pcd");

extractor->savePCD(object, "object.pcd");

// extractor->saveSPD(object, "object.spd");

float factor;

vector<double> objectParams, Gaussian_Weights, Gaussian_Means, Gaussian_Sigma2s;

auto H_model2world = extractor->computeH_model2world(object, objectParams, factor);

PointCloud<PointT>::Ptr objectROI(new PointCloud<PointT>);

pcl::transformPointCloud(*object, *objectROI, H_model2world.inverse());

objectROI = extractor->renderGMM(objectROI, factor, Gaussian_Weights, Gaussian_Means, Gaussian_Sigma2s);

// extractor->viewPCD(objectROI);

extractor->savePCD(objectROI, "objectROI.pcd");

end_time = clock();

std::cout << "Total time: " << (double)(end_time - start_time) / CLOCKS_PER_SEC << "s" << std::endl;

return 0;

}

评论(2)

您还未登录,请登录后发表或查看评论